Processing Drone Photogrammetry Point Clouds for Ground Extraction

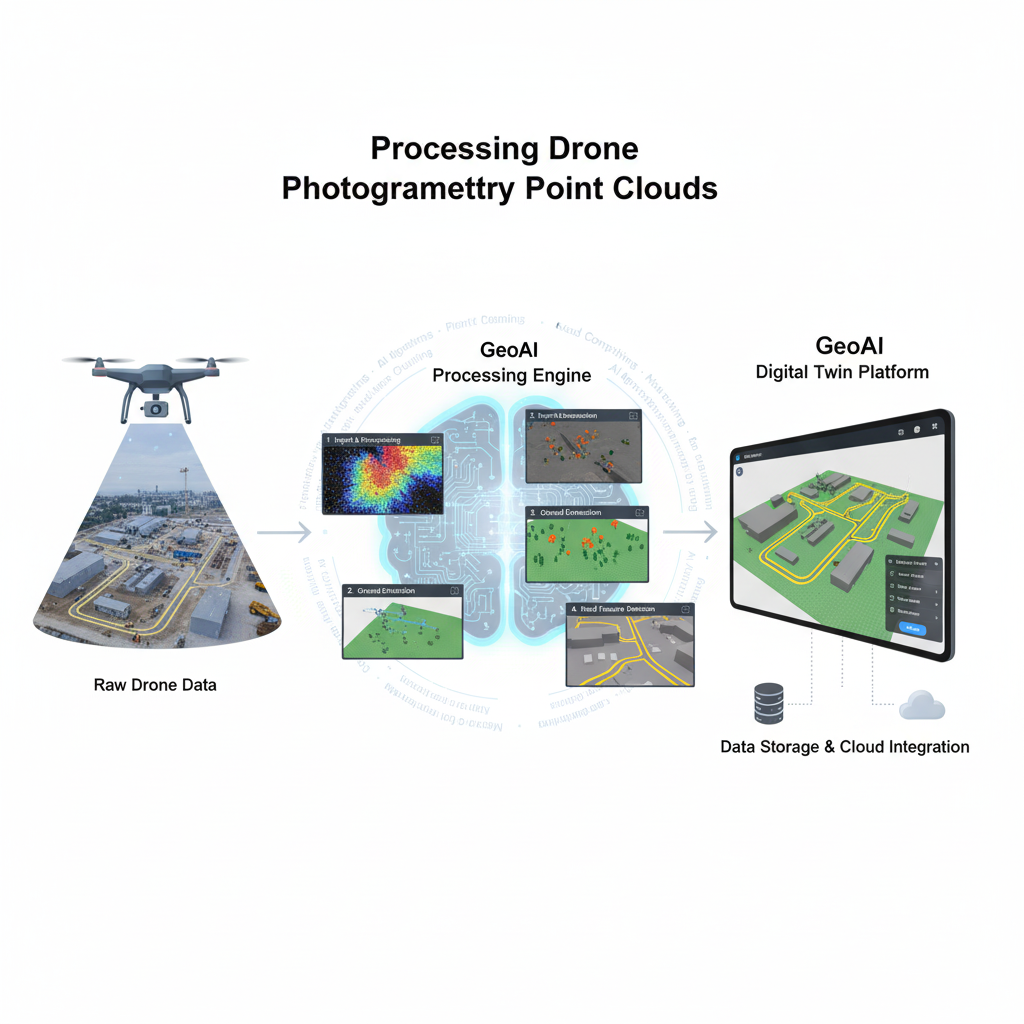

Drone photogrammetry has become one of the most efficient ways to capture high-resolution 3D data of construction sites, roads, and natural terrain. Using drones equipped with cameras, we can generate dense point clouds that represent the real-world surface in remarkable detail. However, raw drone data often includes vegetation, vehicles, and other artifacts that need to be filtered before analysis. At GeoAI, we process these point clouds to extract clean ground points, classify surface features, and deliver data-ready outputs for our digital twin platform.

Drone Photogrammetry Data

A typical drone photogrammetry dataset consists of hundreds or even thousands of overlapping aerial images captured at a specific altitude and flight pattern. These images are processed using photogrammetry software such as Agisoft Metashape, Pix4D, or RealityCapture to produce a dense 3D point cloud, digital surface model (DSM), and orthophoto.

We usually store the point cloud data in formats like LAS, LAZ, PLY, or E57. Each point carries a position (XYZ, with Z as elevation), color (RGB), and, where the source provides it, intensity information. This data provides a detailed representation of the entire scene, including the ground, vegetation, buildings, and other objects.

To tie the point cloud to real-world coordinates, we also rely on ground control points (GCPs) or RTK/PPK positioning during capture and processing.
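One common way to check georeferencing quality is to compare independently surveyed check points against the same locations measured in the point cloud and report the root-mean-square error (RMSE). The sketch below is illustrative only: the function name `checkpoint_rmse` and the sample coordinates are assumptions, not part of our production tooling.

```python
import math

def checkpoint_rmse(surveyed, measured):
    """Return (horizontal_rmse, vertical_rmse) for paired XYZ check points."""
    if len(surveyed) != len(measured) or not surveyed:
        raise ValueError("need equally sized, non-empty point lists")
    sum_h = 0.0  # accumulated squared horizontal error
    sum_v = 0.0  # accumulated squared vertical error
    for (sx, sy, sz), (mx, my, mz) in zip(surveyed, measured):
        sum_h += (mx - sx) ** 2 + (my - sy) ** 2
        sum_v += (mz - sz) ** 2
    n = len(surveyed)
    return math.sqrt(sum_h / n), math.sqrt(sum_v / n)

# Hypothetical check points (metres): surveyed on site vs. read from the cloud
surveyed = [(100.0, 200.0, 50.0), (150.0, 250.0, 52.0)]
measured = [(100.03, 200.04, 50.02), (149.97, 249.96, 51.98)]
rmse_h, rmse_v = checkpoint_rmse(surveyed, measured)
print(f"horizontal RMSE: {rmse_h:.3f} m, vertical RMSE: {rmse_v:.3f} m")
```

Reporting horizontal and vertical error separately is useful because photogrammetric elevation error often differs from planimetric error.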

Processing Drone Photogrammetry

At GeoAI, we use a structured workflow to turn raw drone photogrammetry point clouds into clean, labeled datasets ready for analysis and integration into a digital twin.

1. Import and Preprocessing

We start by importing your point cloud into our processing environment. If the dataset is large, we tile it into manageable sections to improve performance. We remove outliers and noisy points using statistical filters to ensure only relevant data remains.
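As a rough illustration of a statistical filter, the sketch below removes points whose mean distance to their nearest neighbors is unusually large compared with the rest of the cloud. The function name and the parameters `k` and `std_ratio` are assumptions chosen for the example. The brute-force neighbor search is only practical for small samples; a real pipeline would use a spatial index.

```python
import math
import statistics

def remove_statistical_outliers(points, k=3, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours
    exceeds the global mean by more than std_ratio standard deviations."""
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force distances to every other point (O(n^2), sketch only)
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]

# A flat 3 x 3 patch of points plus one stray point far above it
patch = [(float(x), float(y), 0.0) for x in range(3) for y in range(3)]
noisy = patch + [(1.0, 1.0, 50.0)]
cleaned = remove_statistical_outliers(noisy)
print(len(noisy), "->", len(cleaned))
```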

2. Ground Point Extraction

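One of the most important steps is to isolate the ground surface. Drone photogrammetry data includes everything the camera sees (trees, vehicles, and temporary objects), so we use algorithms such as Progressive TIN (Triangulated Irregular Network) Densification or the Cloth Simulation Filter (CSF) to classify ground and non-ground points.

The two algorithms work differently. CSF turns the point cloud upside down and simulates a virtual cloth draped over it; points that lie close to the settled cloth are classified as ground. Progressive TIN Densification starts from the lowest points in a coarse grid, builds a triangulated surface from them, and iteratively adds points that fall within distance and angle thresholds of that surface. From the filtered ground points, we generate a clean Digital Terrain Model (DTM) that represents the bare-earth surface without vegetation or structures.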
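Neither CSF nor Progressive TIN Densification fits in a few lines, so the sketch below uses a much simpler stand-in, a grid-minimum filter, just to show the idea of separating ground from non-ground by local height. It treats the lowest point in each grid cell as ground and accepts any point within a tolerance of it. The function name and the `cell_size` and `height_tol` values are assumptions, and this approach breaks down on steep slopes or where an object covers a whole cell.

```python
import math

def classify_ground(points, cell_size=1.0, height_tol=0.2):
    """Return one boolean per point: True if the point lies within
    height_tol of the lowest point in its XY grid cell."""
    lowest = {}
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        if key not in lowest or z < lowest[key]:
            lowest[key] = z
    flags = []
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        flags.append(z - lowest[key] <= height_tol)
    return flags

# Hypothetical sample: ground near 10 m with two elevated points
sample = [
    (0.2, 0.2, 10.0),  # ground
    (0.5, 0.5, 10.1),  # ground
    (0.6, 0.4, 13.0),  # tree canopy
    (1.5, 0.5, 10.3),  # ground in the next cell
    (1.6, 0.6, 12.0),  # vehicle roof
]
ground_flags = classify_ground(sample)
print(ground_flags)
```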

3. Vegetation and Artifact Removal

After separating ground points, we classify vegetation using color (RGB) and height thresholds. Points with high green channel values and elevation above the terrain are labeled as vegetation. Artifacts like vehicles, construction materials, or scaffolding are identified through intensity variance and shape analysis.
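The color-and-height rule described above can be sketched as a single test per point. The thresholds below (`min_height` of 0.3 m and a green share of 0.4) and the function name are illustrative assumptions; suitable values depend on lighting, season, and camera.

```python
def is_vegetation(z, ground_z, rgb, min_height=0.3, min_green_ratio=0.4):
    """Flag a point as vegetation when it sits above the terrain and
    green dominates its color."""
    r, g, b = rgb
    total = r + g + b
    if total == 0:
        return False  # pure black carries no usable color information
    height_above_ground = z - ground_z
    green_ratio = g / total
    return height_above_ground > min_height and green_ratio > min_green_ratio

# Hypothetical points: (elevation, terrain elevation below the point, RGB)
print(is_vegetation(12.0, 10.0, (60, 140, 50)))     # green and elevated
print(is_vegetation(10.05, 10.0, (60, 140, 50)))    # green but at ground level
print(is_vegetation(11.5, 10.0, (120, 120, 120)))   # elevated but gray
```

Using the green share of the total rather than the raw green value makes the rule less sensitive to overall brightness.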

4. Road and Surface Feature Detection

Once the ground surface is defined, we detect and label roads by analyzing slope, roughness, and intensity uniformity. Roads typically appear as flat, continuous regions with minimal height variation. Using AI-based segmentation, we can automatically detect and outline roads, paved surfaces, and open areas.
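To make the slope and roughness criteria concrete, here is a minimal sketch that works on a gridded DTM (a list of rows of elevations). For each interior cell it estimates slope from central differences and roughness as the spread of elevations around the local plane in a 3 x 3 window. The function name and thresholds are assumptions, and this rule-based pass only marks flat, smooth candidates; it is not the AI-based segmentation itself.

```python
import math
import statistics

def road_candidates(dtm, cell_size=1.0, max_slope_deg=5.0, max_roughness=0.05):
    """Return a boolean grid marking interior cells that are both flat and smooth."""
    rows, cols = len(dtm), len(dtm[0])
    mask = [[False] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            # Slope from central differences
            dz_dx = (dtm[i][j + 1] - dtm[i][j - 1]) / (2 * cell_size)
            dz_dy = (dtm[i + 1][j] - dtm[i - 1][j]) / (2 * cell_size)
            slope_deg = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
            # Roughness: spread of residuals around the local plane
            window = [(a, b) for a in (i - 1, i, i + 1) for b in (j - 1, j, j + 1)]
            mean_z = statistics.fmean(dtm[a][b] for a, b in window)
            residuals = [
                dtm[a][b]
                - (mean_z + dz_dx * (b - j) * cell_size + dz_dy * (a - i) * cell_size)
                for a, b in window
            ]
            roughness = statistics.pstdev(residuals)
            mask[i][j] = slope_deg <= max_slope_deg and roughness <= max_roughness
    return mask

flat = [[10.0] * 4 for _ in range(4)]
print(road_candidates(flat)[1][1])
```

Border cells are left unmarked because the 3 x 3 window does not fit there.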

5. Point Cloud Classification and Simplification

The classified point cloud now contains labeled categories such as ground, vegetation, artifacts, and road. Depending on your needs, we can simplify the ground points to create a lightweight terrain model or retain full resolution for engineering-grade accuracy.
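One simple way to lighten a ground point set is grid thinning: keep a single representative point per XY cell. The sketch below uses the centroid of each cell. The function name and `cell_size` are assumptions, and other simplification strategies (for example, keeping more points where the terrain changes quickly) may suit a given project better.

```python
import math
from collections import defaultdict

def thin_points(points, cell_size=1.0):
    """Replace all points in each XY grid cell with their centroid."""
    cells = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))
        cells[key].append(p)
    thinned = []
    for key in sorted(cells):  # sorted for a deterministic output order
        pts = cells[key]
        n = len(pts)
        thinned.append(tuple(sum(coord) / n for coord in zip(*pts)))
    return thinned

ground = [(0.1, 0.1, 10.0), (0.3, 0.3, 10.2), (1.5, 0.5, 11.0)]
thinned = thin_points(ground, cell_size=1.0)
print(len(ground), "->", len(thinned))
```

A larger `cell_size` gives a lighter model at the cost of terrain detail.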

Drone Photogrammetry Processing Output

The final outputs from this process are flexible and tailored to your project’s goals:

- Ground-only point cloud (LAS/LAZ format) for terrain modeling

- Digital Terrain Model (DTM) and Digital Surface Model (DSM) in GeoTIFF format

- Labeled point cloud with class codes for ground, vegetation, road, and artifacts

- Contour lines and slope maps derived from filtered terrain data

- Simplified 3D mesh for integration with BIM or GIS platforms
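For the labeled point cloud, class codes are typically written using the ASPRS LAS classification scheme. The sketch below maps the labels used in this article to standard codes as we understand them from the LAS 1.4 specification (point data record formats 6 to 10). The artifact code 64, the height thresholds that split vegetation into low, medium, and high, and the function name are assumptions for illustration only.

```python
# Standard ASPRS LAS 1.4 classification codes (point formats 6 to 10)
GROUND = 2
LOW_VEGETATION = 3
MEDIUM_VEGETATION = 4
HIGH_VEGETATION = 5
ROAD_SURFACE = 11
UNCLASSIFIED = 1

# Assumption: artifacts have no standard code, so a user-definable one is used
ARTIFACT = 64

def to_class_code(label, height_above_ground=0.0):
    """Map a pipeline label to a LAS class code."""
    if label == "ground":
        return GROUND
    if label == "road":
        return ROAD_SURFACE
    if label == "artifact":
        return ARTIFACT
    if label == "vegetation":
        # Hypothetical height thresholds in metres; the spec does not fix them
        if height_above_ground < 0.5:
            return LOW_VEGETATION
        if height_above_ground < 2.0:
            return MEDIUM_VEGETATION
        return HIGH_VEGETATION
    return UNCLASSIFIED

print(to_class_code("vegetation", 3.0))
```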

We can manage and visualize all these outputs through GeoAI’s digital twin platform. You can view real-world conditions in 3D, measure elevation profiles, monitor changes, and plan future work directly from your browser.

Integration with GeoAI Digital Twin Platform

Once processed, your data becomes part of GeoAI’s connected digital twin environment. We upload the cleaned and labeled models to our platform, allowing you to visualize and interact with your site in real time.

You can toggle between terrain layers, identify features such as roads or slopes, and overlay other spatial datasets like LiDAR scans or construction design files. Our tools also enable automated comparison between time-based scans to detect deformation, erosion, or progress over time.
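A basic form of comparison between time-based scans is differencing two DTM grids that share the same extent and cell size, then summing the changes into cut and fill volumes. The sketch below is a simplified illustration under those assumptions; the function name and the `min_change` noise threshold are hypothetical and do not describe the platform's internal method.

```python
def elevation_change(dtm_before, dtm_after, cell_size=1.0, min_change=0.05):
    """Return (cut_volume, fill_volume) between two aligned DTM grids.
    Changes smaller than min_change are treated as noise and ignored."""
    cut = 0.0
    fill = 0.0
    cell_area = cell_size ** 2
    for row_before, row_after in zip(dtm_before, dtm_after):
        for z_before, z_after in zip(row_before, row_after):
            dz = z_after - z_before
            if abs(dz) < min_change:
                continue
            if dz > 0:
                fill += dz * cell_area   # surface rose: material added
            else:
                cut += -dz * cell_area   # surface dropped: material removed
    return cut, fill

# Hypothetical 2 x 2 grids (metres) with 2 m cells
before = [[10.0, 10.0], [10.0, 10.0]]
after = [[10.5, 10.0], [9.0, 10.02]]
cut, fill = elevation_change(before, after, cell_size=2.0)
print(f"cut: {cut:.1f} m3, fill: {fill:.1f} m3")
```

The noise threshold matters in practice because small differences between surveys often reflect measurement error rather than real change.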

This integration transforms drone photogrammetry data into actionable insights for construction, infrastructure maintenance, and asset management.

Example Workflow

- Receive drone photogrammetry point cloud (LAS/LAZ/PLY)

- Apply noise filtering and ground extraction

- Classify vegetation, road, and artifacts

- Generate DTM, DSM, and contour maps

- Upload results to GeoAI’s digital twin platform for visualization and reporting

Benefits of Drone Photogrammetry with GeoAI

- Efficient processing of large-area datasets

- Automatic filtering and classification using AI algorithms

- Integration with 3D digital twin for interactive visualization

- Support for multiple file formats and project scales

- Expertise in both geospatial analysis and construction applications

Frequently Asked Questions about Drone Photogrammetry

Drone photogrammetry is the process of capturing overlapping aerial images using drones and converting them into 3D point clouds, maps, and terrain models.

We can extract ground points using filtering algorithms that remove vegetation and elevated objects, leaving only the true terrain surface.

We accept LAS, LAZ, PLY, E57, and other common point cloud formats generated by photogrammetry or LiDAR software.

Outputs include a cleaned ground-only point cloud, DTM, DSM, labeled vegetation and road layers, and contour maps ready for GIS or CAD integration.

We integrate the processed point cloud data into GeoAI’s digital twin platform, allowing users to visualize, analyze, and monitor real-world conditions in 3D.
