How to Choose a Point Cloud Processing Approach: Traditional vs. Deep Learning Methods

Point cloud data, generated by 3D capture technologies such as LiDAR (laser scanning) and photogrammetry, has become an essential tool in industries ranging from construction and urban planning to autonomous vehicles and virtual reality. The challenge lies in processing and analyzing this massive, unstructured data. Two main approaches have emerged: traditional point cloud processing techniques and deep learning methods. But how do you choose between them? In this article, we’ll explore the strengths and limitations of each approach to help you make an informed decision.

What is Point Cloud Data?

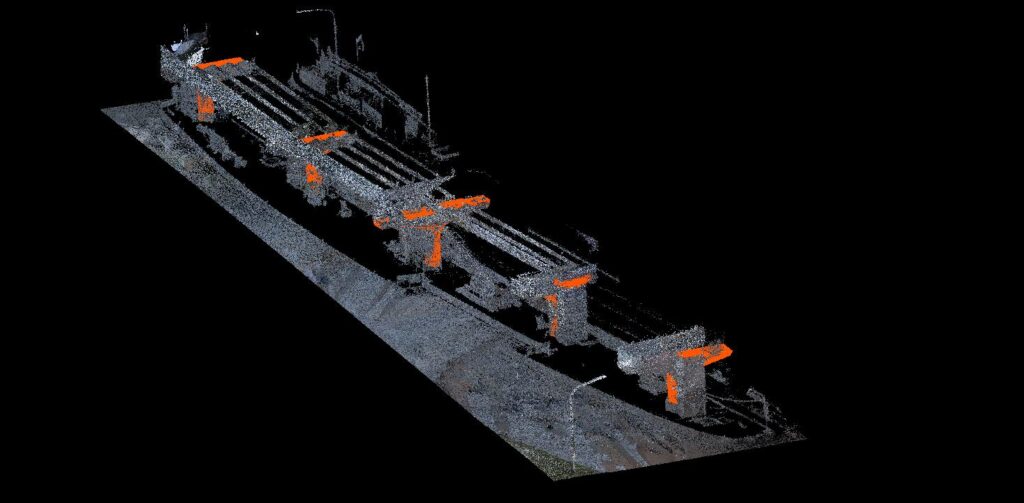

Point cloud data consists of millions (sometimes billions) of individual points in 3D space that represent the surface of objects. Each point has a set of coordinates (X, Y, Z) and often contains additional attributes such as color, intensity, or classification. This data is invaluable for creating detailed 3D models, mapping environments, and analyzing objects in real-world conditions.

However, a point cloud is unordered, irregularly sampled, and often very large, so processing it requires specialized algorithms. Choosing the right approach depends on factors like the complexity of the data, the application, and the desired outcome.
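
To make the structure described above concrete, here is a minimal Python sketch of a point with coordinates and optional attributes. The `Point` class, its field names, and the `bounding_box` helper are illustrative assumptions, not part of any particular library or file format; production tools usually store these values in packed arrays rather than one object per point.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Point:
    # Coordinates are always present.
    x: float
    y: float
    z: float
    # Attributes are optional; which ones exist depends on the sensor.
    intensity: Optional[float] = None
    color: Optional[Tuple[int, int, int]] = None
    classification: Optional[int] = None  # illustrative integer label


def bounding_box(points: List[Point]):
    """Return the (min corner, max corner) of the cloud's axis-aligned extent."""
    xs = [p.x for p in points]
    ys = [p.y for p in points]
    zs = [p.z for p in points]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


cloud = [
    Point(0.0, 0.0, 0.0, intensity=0.8, classification=2),
    Point(1.0, 2.0, 0.1, color=(120, 130, 90)),
    Point(0.5, 1.0, 3.2),
]

print(bounding_box(cloud))
```

Attributes are optional because different sensors record different things; a photogrammetry-derived cloud may carry color but no intensity, for instance.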

Traditional Point Cloud Processing Methods

Traditional point cloud processing methods rely on hand-designed algorithms and mathematical techniques that have been developed over decades. These methods are rule-based and typically involve geometric analysis, feature extraction, and segmentation.

Key Techniques:

- Filtering and Denoising: Removing noise and outliers from the raw data to improve quality, for example by discarding points that lie unusually far from their neighbors.

- Feature Extraction: Identifying key features, such as edges, corners, or planes, within the point cloud.

- Segmentation: Dividing the point cloud into meaningful clusters, such as separating ground points from buildings or trees.

- Registration: Aligning multiple point clouds from different sources into a common coordinate system, for example with the Iterative Closest Point (ICP) algorithm.

- Classification: Assigning labels to points based on predefined rules, such as classifying points as ground, building, or vegetation.
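
Two of the steps above, denoising and rule-based classification, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the function names, the brute-force neighbor search, and the parameters `k`, `std_ratio`, and `ground_tolerance` are all hypothetical choices for this example, and the height threshold only makes sense on flat terrain. Real pipelines typically use a spatial index such as a k-d tree and more robust ground filters.

```python
import math
from statistics import mean, pstdev


def mean_knn_distance(points, index, k):
    """Average distance from one point to its k nearest neighbors (brute force)."""
    p = points[index]
    dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != index)
    return mean(dists[:k])


def remove_outliers(points, k=3, std_ratio=1.0):
    """Drop points whose mean neighbor distance is far above the cloud-wide average."""
    scores = [mean_knn_distance(points, i, k) for i in range(len(points))]
    threshold = mean(scores) + std_ratio * pstdev(scores)
    return [p for p, s in zip(points, scores) if s <= threshold]


def classify_by_height(points, ground_tolerance=0.2):
    """Label points within ground_tolerance of the lowest point as ground."""
    z_min = min(p[2] for p in points)
    return [
        "ground" if p[2] - z_min <= ground_tolerance else "non-ground"
        for p in points
    ]


# A tiny synthetic scene: a flat 3 x 3 patch, two elevated points, one stray point.
ground = [(float(x), float(y), 0.0) for x in range(3) for y in range(3)]
raised = [(1.0, 1.0, 2.0), (1.0, 1.0, 2.5)]
stray = [(50.0, 50.0, 50.0)]
cloud = ground + raised + stray

cleaned = remove_outliers(cloud, k=3, std_ratio=1.0)
labels = classify_by_height(cleaned)

print(len(cloud), "points before filtering,", len(cleaned), "after")
print(labels.count("ground"), "ground points,", labels.count("non-ground"), "non-ground points")
```

Both functions depend on hand-picked thresholds, which is exactly the kind of parameter tuning that rule-based methods require for each new dataset.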

Strengths of Traditional Methods:

- Mature and Well-Understood: Traditional techniques have been widely researched and used, making them reliable and well-documented.

- Resource-Efficient: These methods typically require less computational power than deep learning, making them suitable for environments with limited resources.

- Predictable and Explainable: Traditional methods follow a rule-based, deterministic approach, making the results more transparent and easier to interpret.

Limitations of Traditional Methods:

- Labor-Intensive: Manual parameter tuning and rule definition can be time-consuming and require domain expertise.

- Limited Scalability: Rules and parameters tuned for one scene often do not carry over to very large point clouds or complex environments, such as densely populated urban areas, so the tuning effort grows with the project.

- Inflexibility: These methods rely heavily on predefined rules, which may not adapt well to diverse or unstructured data.

Deep Learning for Point Cloud Processing

Deep learning automates feature extraction by learning patterns directly from data. Unlike traditional methods, deep learning models can be trained to recognize complex patterns and relationships within point clouds without manual feature engineering.

Key Techniques:

- 3D Convolutional Neural Networks (CNNs): These networks extend 2D CNNs to 3D data. Because convolutions need a regular grid, the point cloud is usually converted to voxels first, after which the network can process the spatial information it contains.

- PointNet and PointNet++: These deep learning architectures are specifically designed for point cloud data, allowing the network to learn directly from unordered point sets.

- Graph Neural Networks (GNNs): These models use graph-based representations to capture relationships between neighboring points, making them well suited to point cloud segmentation and classification tasks.
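
The idea behind learning from unordered point sets can be illustrated without a deep learning framework. The sketch below applies the same small layer to every point and then takes a channel-wise maximum, which is the combination of shared per-point layers and symmetric pooling that PointNet is built on. The weights here are random and untrained, and the names `shared_layer` and `global_feature` are hypothetical; the only purpose of the example is to show that the output does not change when the points are reordered.

```python
import random


def shared_layer(point, weights, biases):
    """One linear layer plus ReLU, applied identically to every point."""
    return [
        max(0.0, sum(w * c for w, c in zip(row, point)) + b)
        for row, b in zip(weights, biases)
    ]


def global_feature(points, weights, biases):
    """Channel-wise max over all per-point features (a symmetric function)."""
    per_point = [shared_layer(p, weights, biases) for p in points]
    return [max(channel) for channel in zip(*per_point)]


# Random, untrained parameters: 3 input coordinates -> 4 feature channels.
rng = random.Random(0)
weights = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
biases = [rng.uniform(-1, 1) for _ in range(4)]

cloud = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.2), (0.3, 2.0, 1.1), (2.0, 1.0, 0.4)]
reordered = list(reversed(cloud))

feature_a = global_feature(cloud, weights, biases)
feature_b = global_feature(reordered, weights, biases)

# The two descriptors match because max pooling ignores point order.
print(feature_a == feature_b)
```

In a trained network the layer weights are learned from labeled examples, and the pooled vector feeds further layers that produce class scores or per-point labels.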

Strengths of Deep Learning Methods:

- Automated Feature Learning: Deep learning models can automatically learn to extract relevant features from raw point cloud data, reducing the need for manual intervention.

- High Accuracy: Deep learning models often outperform traditional methods in tasks like object detection, segmentation, and classification, particularly in complex environments.

- Scalability: Once trained, a model can be applied to large datasets and adapted to a wide range of applications, from autonomous driving to virtual reality, without rewriting rules for each new scene.

Limitations of Deep Learning Methods:

- High Computational Cost: Deep learning models require significant computational resources for both training and inference. Training in particular usually requires access to GPUs.

- Data-Hungry: Deep learning models need large amounts of labeled data for training, which can be time-consuming and expensive to obtain.

- Black-Box Nature: The results produced by deep learning models can be difficult to interpret, making it harder to understand how the model arrived at its conclusions.

Factors to Consider When Choosing Between Traditional and Deep Learning Methods

1. Project Scope and Complexity

- Traditional Methods: For smaller projects or simpler datasets, traditional methods may be sufficient. If the environment is well-structured (e.g., flat terrain or a single building), rule-based techniques can work efficiently.

- Deep Learning: If you’re dealing with complex environments, such as cityscapes, forests, or large industrial sites, deep learning’s ability to handle large-scale, unstructured data may make it the better option.

2. Data Availability

- Traditional Methods: If labeled data is scarce, traditional methods are a better fit since they rely on predefined rules rather than on extensive training datasets.

- Deep Learning: If you have access to a large, well-labeled dataset, deep learning models can deliver superior performance and accuracy.

3. Accuracy Requirements

- Traditional Methods: For tasks where rough approximations or generalized results are sufficient, traditional methods may offer a quicker and less resource-intensive solution.

- Deep Learning: If you require high precision, such as in autonomous driving or detailed architectural modeling, deep learning methods often provide more accurate results.

4. Computational Resources

- Traditional Methods: If you have limited access to high-end hardware or cloud computing resources, traditional methods are generally more feasible.

- Deep Learning: If you have access to powerful GPUs or cloud infrastructure, deep learning methods become practical, since this hardware shortens both training and inference times.

5. Long-Term Goals

- Traditional Methods: For one-off projects or short-term needs, traditional methods may be more cost-effective.

- Deep Learning: If you plan to build long-term, scalable solutions or continuously process large amounts of data, investing in deep learning models can pay off, because models can be retrained and improved as more labeled data accumulates.
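
The five factors above can be summarized as a simple checklist. The following Python sketch tallies them with equal weight, which is an assumption made purely for illustration; the `ProjectProfile` fields, the `suggest_approach` function, and the threshold of three are hypothetical, and a real decision would weigh the factors according to the project.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    complex_environment: bool    # e.g. a cityscape or forest rather than a single building
    large_labeled_dataset: bool  # enough labeled data to train a model
    needs_high_precision: bool   # approximate results are not acceptable
    has_gpu_or_cloud: bool       # access to suitable training hardware
    long_term_pipeline: bool     # ongoing processing rather than a one-off job


def suggest_approach(profile: ProjectProfile) -> str:
    """Count how many factors favor deep learning; equal weights are an assumption."""
    votes = sum([
        profile.complex_environment,
        profile.large_labeled_dataset,
        profile.needs_high_precision,
        profile.has_gpu_or_cloud,
        profile.long_term_pipeline,
    ])
    return "deep learning" if votes >= 3 else "traditional"


one_off_survey = ProjectProfile(False, False, False, False, False)
city_mapping = ProjectProfile(True, True, True, True, True)

print(suggest_approach(one_off_survey))
print(suggest_approach(city_mapping))
```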

Conclusion

Choosing between traditional and deep learning methods for point cloud data processing depends on various factors, including the complexity of your project, data availability, accuracy requirements, and available resources. Traditional methods are reliable, explainable, and resource-efficient, making them ideal for simpler projects or environments. On the other hand, deep learning methods offer automation, scalability, and superior accuracy, especially in more complex and dynamic environments.

By considering the specific needs of your project, you can select the approach that will provide the most value and ensure efficient and accurate point cloud data processing.
