Deep Learning for Point Cloud Processing

Unlocking the Power of Deep Learning for Point Cloud Processing: Essential Insights

A point cloud is a set of 3D points representing the surfaces of objects in the real world. Point clouds have become ubiquitous in fields such as robotics, autonomous vehicles, and augmented reality. Traditionally, point cloud processing relied on algorithms designed for geometric data, such as random sample consensus (RANSAC), the Hough transform, or the Iterative Closest Point (ICP) algorithm. However, these pipelines are usually resource intensive and still require manual steps. The arrival of deep learning has revolutionized point cloud processing by offering more efficient, robust, and automated solutions. Although it comes with costs, such as the large amount of data needed for training and testing, deep learning is becoming the standard way to process point cloud data.

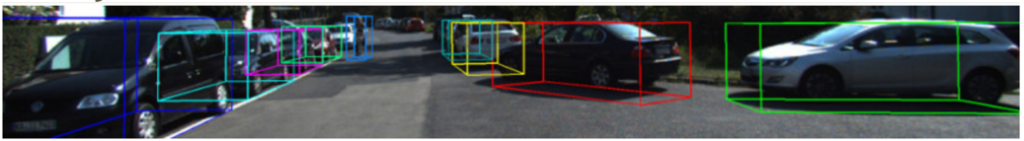

Deep learning for point cloud processing is commonly applied in autonomous driving, where object detection, segmentation, and tracking in 3D space support safe navigation. In robotics, it is used for scene understanding and navigation in complex environments. In 3D reconstruction, it supports scene understanding and the creation of accurate 3D models from point cloud data. In Augmented Reality/Virtual Reality, it enhances the immersive experience through detailed 3D environment mapping.

Before we jump into 3D deep learning, it helps to know the traditional methods for processing 3D point cloud data.

Processing Point Clouds Using Traditional Methods

Traditionally, after data acquisition, processing point clouds involved techniques such as preprocessing, feature extraction, segmentation, and classification or modeling. These methods often relied on handcrafted features and heuristic algorithms, which might lack scalability and robustness. While effective for simple tasks, they may struggle with complex real-world scenarios and diverse datasets.

Preprocessing

Preprocessing aims to clean and prepare the raw point cloud data for further analysis. This includes:

- Noise Removal: Filtering out erroneous points due to sensor inaccuracies or environmental factors.

- Downsampling: Reducing the number of points to make processing more efficient while retaining essential information.

- Normalization: Transforming the data into a common coordinate system or scale.

- Outlier Removal: Eliminating points that are significantly different from the rest of the data set.
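The preprocessing steps above can be sketched with plain NumPy. This is a minimal illustration, not a production pipeline: the function names, the brute-force neighbor search, and the default values for `k`, `std_ratio`, and `voxel_size` are assumptions chosen for the example.

```python
import numpy as np

def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbors is unusually large."""
    # Brute-force pairwise distances: fine for small clouds, O(N^2) memory.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    # After sorting each row, column 0 is the point itself (distance 0).
    mean_knn = np.sort(dist, axis=1)[:, 1:k + 1].mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]

def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel by their centroid."""
    voxel_idx = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(
        voxel_idx, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # guard against shape differences across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate point coordinates per voxel
    return sums / counts[:, None]

def normalize_unit_sphere(points):
    """Center the cloud at the origin and scale it to fit in the unit sphere."""
    centered = points - points.mean(axis=0)
    radius = np.linalg.norm(centered, axis=1).max()
    return centered / radius if radius > 0 else centered

# Synthetic cloud: 200 points in the unit cube plus one far-away outlier.
rng = np.random.default_rng(0)
raw = np.vstack([rng.uniform(0.0, 1.0, size=(200, 3)), [[100.0, 100.0, 100.0]]])
clean = remove_statistical_outliers(raw)
sparse = voxel_downsample(clean, voxel_size=0.25)
normalized = normalize_unit_sphere(sparse)
```

For large clouds, a spatial index such as a k-d tree would normally replace the pairwise distance matrix.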

Feature Extraction

Feature extraction involves identifying and calculating characteristics that are useful for differentiating between different parts of the point cloud. Common features include:

- Geometric Features: Curvature, surface normals, and roughness.

- Statistical Features: Mean, variance, and covariance of point distributions.

- Topological Features: Relationships between points, such as connectivity and adjacency.
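As a rough illustration of the geometric and statistical features listed above, the sketch below estimates a surface normal and a curvature-like "surface variation" value per point from the covariance of its local neighborhood. The function name, the neighborhood size `k`, and the brute-force neighbor search are assumptions made for this example.

```python
import numpy as np

def local_pca_features(points, k=10):
    """Per-point normal and surface variation from the local covariance matrix."""
    n = points.shape[0]
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    neighbor_idx = np.argsort(dist, axis=1)[:, :k]  # includes the point itself
    normals = np.empty((n, 3))
    variation = np.empty(n)
    for i in range(n):
        cov = np.cov(points[neighbor_idx[i]].T)     # 3x3 covariance of the neighborhood
        eigvals, eigvecs = np.linalg.eigh(cov)      # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]                  # direction of least variance
        variation[i] = eigvals[0] / max(eigvals.sum(), 1e-12)
    return normals, variation

# Points sampled on the plane z = 0: normals should align with the z axis
# (up to sign) and the surface variation should be close to zero.
rng = np.random.default_rng(0)
plane = np.column_stack([rng.uniform(size=(100, 2)), np.zeros(100)])
normals, variation = local_pca_features(plane)
```

The sign of each normal is ambiguous here; real pipelines usually orient normals toward the sensor position.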

Segmentation

Segmentation is the process of partitioning the point cloud into meaningful regions or objects. Techniques include:

- Region Growing: Starting from seed points and expanding regions based on similarity criteria like distance and surface normal consistency.

- Clustering: Grouping points based on proximity using algorithms like DBSCAN (Density-Based Spatial Clustering of Applications with Noise).

- Model Fitting: Fitting geometric models (e.g., planes, cylinders) to subsets of the point cloud.
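Model fitting is often done with RANSAC, mentioned in the introduction. The following is a minimal sketch of RANSAC plane fitting in NumPy; the function name, the distance threshold, and the iteration count are illustrative assumptions rather than recommended settings.

```python
import numpy as np

def ransac_plane(points, distance_threshold=0.02, iterations=200, seed=0):
    """Fit a plane n.x + d = 0 by repeatedly sampling three points and counting inliers."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_plane = None
    for _ in range(iterations):
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:          # the three points are (nearly) collinear
            continue
        normal = normal / norm
        d = -normal.dot(sample[0])
        inliers = np.abs(points @ normal + d) < distance_threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_plane = inliers, (normal, d)
    return best_plane, best_inliers

# Synthetic scene: a noisy ground plane (first 300 points) plus 60 scattered points.
rng = np.random.default_rng(1)
ground = np.column_stack([
    rng.uniform(-1.0, 1.0, size=(300, 2)),
    rng.normal(scale=0.005, size=300),
])
clutter = rng.uniform(-1.0, 1.0, size=(60, 3))
scene = np.vstack([ground, clutter])
(plane_normal, plane_d), plane_mask = ransac_plane(scene)
```

Removing the inliers and running the function again on the remaining points is a common way to extract several planes in turn.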

Classification or Modeling

This step involves assigning labels to different segments or building a model from the segmented data. Methods include:

- Machine Learning: Using classifiers like Support Vector Machines (SVM), Random Forests, or Neural Networks trained on labeled point cloud data.

- Shape Matching: Comparing segments to known shapes or templates.

- Reconstruction: Creating 3D models or meshes from the segmented point clouds, often using algorithms like Poisson surface reconstruction or Delaunay triangulation.

Processing Point Clouds Using Deep Learning

Deep learning approaches, including point-based networks, Convolutional Neural Networks (CNNs), and Graph Neural Networks (GNNs), have emerged as powerful tools for point cloud processing. Point-based architectures like PointNet and its variants operate directly on unordered point sets using shared multi-layer perceptrons, enabling end-to-end learning from raw point cloud data. GNNs, on the other hand, build a graph over neighboring points and exchange information along its edges.

Point Cloud Dataset

To get the most out of deep learning for point cloud processing, two components are crucial: high-quality datasets and suitable algorithms. Datasets should encompass diverse scenes, objects, and environmental conditions to ensure model generalization. We can collect our own dataset using methods such as laser scanning or photogrammetry to produce 3D point cloud data. Alternatively, we can train a model on a public dataset and then adapt it to our own data through transfer learning. Below are some examples of publicly available datasets.

Object Dataset

- ShapeNet Dataset. A richly annotated, large-scale dataset of 3D shapes such as airplanes, chairs, beds, and tables. It is suitable for upsampling projects and object detection in 3D point clouds.

- ScanObjectNN Dataset. The dataset contains around 15,000 objects in 15 categories, with 2,902 unique object instances. The raw objects are represented by a list of points with global and local coordinates, normals, color attributes, and semantic labels. The objects in this dataset include bags, boxes, beds, cabinets, chairs, sofas, etc.

Indoor Scene Dataset

- S3DIS Dataset. The Stanford 3D Indoor Scene Dataset (S3DIS) has 6 large-scale indoor areas with 271 rooms. Each point in the scene is annotated with one of 13 semantic categories: ceiling, floor, wall, beam, column, window, door, chair, table, bookcase, sofa, board, and clutter. This dataset is suitable for semantic segmentation and object detection in indoor point cloud scenes.

- ScanNet Dataset. ScanNet contains 3D reconstructions of more than 1,500 indoor scenes. The dataset includes over 2.5 million RGB-D frames captured using depth sensors, which provide both color (RGB) and depth information. Each frame is annotated with semantic labels, instance labels, and surface normals. The dataset also includes 3D bounding boxes and camera poses.

Outdoor Scene Dataset

- KITTI Dataset. The Karlsruhe Institute of Technology and Toyota Technological Institute (KITTI) dataset contains traffic scenes with objects such as vehicles, trees, and pedestrians. It is one of the most popular datasets in autonomous driving and mobile robotics. The data was recorded using high-resolution color and grayscale stereo cameras and a 3D laser scanner. This dataset is suitable for point cloud object detection in outdoor scenes.

- NuScenes Dataset. The NuScenes dataset is a large 3D data collection for autonomous driving research. It includes 3D bounding boxes for 1,000 scenes gathered in Boston and Singapore. Each scene spans 20 seconds and is annotated at 2 Hz, resulting in 28,130 training samples, 6,019 validation samples, and 6,008 testing samples. The dataset comprises a comprehensive sensor suite: a 32-beam LiDAR, six cameras, and radars providing full 360° coverage. The 3D object detection challenge on this dataset usually assesses performance across ten categories: cars, trucks, buses, trailers, construction vehicles, pedestrians, motorcycles, bicycles, traffic cones, and barriers.
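As a small practical example for the KITTI dataset listed above: its LiDAR scans are commonly distributed as flat binary files of 32-bit floats, four values per point (x, y, z, reflectance). The sketch below reads such a file with NumPy. The function name and the file name are hypothetical, and the round trip uses synthetic data so the snippet runs without downloading the dataset.

```python
import os
import tempfile

import numpy as np

def load_kitti_bin(path):
    """Read a KITTI-style LiDAR scan: float32 values, four per point (x, y, z, reflectance)."""
    return np.fromfile(path, dtype=np.float32).reshape(-1, 4)

# Round trip on a synthetic scan (5 points) instead of a real KITTI file.
synthetic = np.random.default_rng(0).random((5, 4), dtype=np.float32)
with tempfile.TemporaryDirectory() as tmp:
    scan_path = os.path.join(tmp, "000000.bin")  # hypothetical file name
    synthetic.tofile(scan_path)
    loaded = load_kitti_bin(scan_path)

xyz, reflectance = loaded[:, :3], loaded[:, 3]
```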

Deep Learning for Point Cloud Algorithms

Deep learning algorithms for point clouds must be specifically designed to handle the irregular and unstructured nature of this data type. Unlike structured grid data such as images, point clouds consist of unordered sets of points in a 3D space, posing unique challenges for deep learning methods.

Some key approaches in deep learning for point clouds include:

Point-Based Methods

- PointNet: PointNet is a pioneering architecture that processes point clouds directly. It treats each point independently using multi-layer perceptrons (MLPs) to extract point features and then aggregates these features using a symmetric function (e.g., max pooling) to achieve permutation invariance.

- PointNet++: An extension of PointNet, PointNet++ incorporates hierarchical feature learning. It uses a nested structure to capture local features at multiple scales, improving the ability to understand fine-grained details in the point cloud.
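The core idea behind PointNet, a shared MLP applied to every point followed by a symmetric max pooling, can be sketched in a few lines of NumPy. This is only an illustration of permutation invariance: the weights are random and untrained, the layer sizes are arbitrary assumptions, and the input and feature transform networks of the full architecture are omitted.

```python
import numpy as np

def shared_mlp(points, weights, biases):
    """Apply the same small MLP (with ReLU) to every point independently."""
    features = points
    for w, b in zip(weights, biases):
        features = np.maximum(features @ w + b, 0.0)
    return features

def global_feature(points, weights, biases):
    """Aggregate per-point features with max pooling, a symmetric function."""
    return shared_mlp(points, weights, biases).max(axis=0)

rng = np.random.default_rng(0)
sizes = [3, 16, 32]  # illustrative layer widths
weights = [rng.normal(size=(a, b)) for a, b in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(b) for b in sizes[1:]]

cloud = rng.normal(size=(128, 3))
shuffled = cloud[rng.permutation(len(cloud))]

# Reordering the points does not change the pooled descriptor.
feat_original = global_feature(cloud, weights, biases)
feat_shuffled = global_feature(shuffled, weights, biases)
order_invariant = np.allclose(feat_original, feat_shuffled)
```

Because each point passes through the same weights and the maximum ignores order, the global feature depends only on the set of points, not on how they are listed.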

Convolution-Based Methods

- PointCNN: PointCNN learns an X-transformation to permute the input points into a canonical order, enabling the use of traditional convolution operations. This approach attempts to mimic the advantages of CNNs on regular grids.

- DGCNN (Dynamic Graph CNN): DGCNN constructs a graph from the point cloud, where points are nodes and edges represent relationships based on k-nearest neighbors. Convolutions are performed on this dynamic graph, which adapts during training to capture the local geometric structures.

Voxel-Based Methods

- VoxelNet: VoxelNet divides the point cloud into 3D voxels and applies 3D convolutions. Each voxel contains a small set of points, and point features within each voxel are aggregated before applying the convolution operation. This method leverages the efficiency of convolution operations on regular grids while maintaining some geometric information.

- Sparse Voxel Networks: These networks, such as MinkowskiNet, use sparse convolution operations to process voxels efficiently. They focus computation on non-empty voxels, significantly reducing memory and computational costs.
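To see why sparse voxel representations pay off, the sketch below voxelizes a synthetic surface and compares the number of occupied voxels with the size of the full dense grid. The function name and the voxel size are assumptions for illustration; this is not how VoxelNet or MinkowskiNet are implemented internally.

```python
import numpy as np

def sparse_voxel_grid(points, voxel_size):
    """Return occupied voxel coordinates, points per voxel, grid shape, and occupancy ratio."""
    coords = np.floor((points - points.min(axis=0)) / voxel_size).astype(np.int64)
    occupied, counts = np.unique(coords, axis=0, return_counts=True)
    grid_shape = coords.max(axis=0) + 1
    occupancy = len(occupied) / int(np.prod(grid_shape))
    return occupied, counts, grid_shape, occupancy

# Points sampled on the surface of a unit sphere: like most scanned
# surfaces, they touch only a small fraction of the surrounding volume.
rng = np.random.default_rng(0)
directions = rng.normal(size=(2000, 3))
sphere = directions / np.linalg.norm(directions, axis=1, keepdims=True)

occupied, counts, grid_shape, occupancy = sparse_voxel_grid(sphere, voxel_size=0.1)
```

A dense 3D convolution would visit every cell of `grid_shape`, while a sparse convolution only needs the rows of `occupied`.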

Graph-Based Methods

- Graph Neural Networks (GNNs): GNNs represent the point cloud as a graph where points are nodes connected by edges based on spatial relationships. Message passing and aggregation operations are used to learn features that capture the geometric structure of the point cloud.

- EdgeConv: A specific implementation within the DGCNN framework, EdgeConv constructs edge features by computing differences between neighboring points. It allows the network to learn edge attributes that are crucial for understanding local structures.
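The EdgeConv idea can be illustrated as follows: build a k-nearest-neighbor graph, form edge features from each center point and the differences to its neighbors, apply a shared linear layer with ReLU, and take the maximum over neighbors. This is a simplified sketch with random, untrained weights; the function names, `k`, and the layer widths are assumptions made for the example.

```python
import numpy as np

def knn_indices(features, k):
    """Indices of the k nearest neighbors of every row (brute force)."""
    dist = np.linalg.norm(features[:, None, :] - features[None, :, :], axis=2)
    return np.argsort(dist, axis=1)[:, 1:k + 1]  # column 0 is the point itself

def edge_conv(features, weight, k=8):
    """One simplified EdgeConv layer: (N, C) -> (N, C_out)."""
    idx = knn_indices(features, k)                        # (N, k)
    neighbors = features[idx]                             # (N, k, C)
    center = np.repeat(features[:, None, :], k, axis=1)   # (N, k, C)
    edge_input = np.concatenate([center, neighbors - center], axis=2)  # (N, k, 2C)
    edge_features = np.maximum(edge_input @ weight, 0.0)  # shared linear layer + ReLU
    return edge_features.max(axis=1)                      # max over neighbors

rng = np.random.default_rng(0)
cloud = rng.normal(size=(64, 3))
w1 = rng.normal(size=(6, 16))    # input has 2 * 3 channels
w2 = rng.normal(size=(32, 32))   # input has 2 * 16 channels

layer1 = edge_conv(cloud, w1)
# The graph is rebuilt from the new features, which is what makes it "dynamic".
layer2 = edge_conv(layer1, w2)
```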

Training Process

The training process involves feeding labeled point cloud data into the chosen deep learning model. During training, the model learns to extract relevant features, classify objects, segment regions, or perform other desired tasks. In the real world, labeled training data is often scarce. Therefore, we use data augmentation techniques, regularization, and optimization algorithms to enhance the model’s performance and generalization ability.
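A few common point cloud augmentations are a random rotation about the vertical axis, a random global scale, and per-point jitter. The sketch below combines them; the function name and the default ranges are illustrative assumptions, and suitable values depend on the dataset and task.

```python
import numpy as np

def augment(points, rng, max_scale=0.1, jitter_sigma=0.01):
    """Randomly rotate about the z axis, scale, and jitter an (N, 3) point cloud."""
    angle = rng.uniform(0.0, 2.0 * np.pi)
    c, s = np.cos(angle), np.sin(angle)
    rot_z = np.array([[c, -s, 0.0],
                      [s,  c, 0.0],
                      [0.0, 0.0, 1.0]])
    scale = rng.uniform(1.0 - max_scale, 1.0 + max_scale)
    jitter = rng.normal(scale=jitter_sigma, size=points.shape)
    return (points @ rot_z.T) * scale + jitter

rng = np.random.default_rng(0)
cloud = rng.normal(size=(256, 3))
# Each call produces a different variant of the same labeled sample.
variants = [augment(cloud, rng) for _ in range(4)]
```

Rotating only about the z axis keeps the "up" direction fixed, which usually matches how indoor and driving scenes are captured.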

Testing Process

Once trained, the model undergoes testing on unseen data to evaluate its performance. Testing involves feeding raw or preprocessed point clouds into the trained model and analyzing its predictions. We usually use metrics such as accuracy, precision, recall, and Intersection over Union (IoU) to quantify the model’s performance across different tasks.
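For per-point semantic segmentation, overall accuracy and IoU can be computed from a confusion matrix. The sketch below is one way to do it; the function name and the tiny label arrays are made up for illustration.

```python
import numpy as np

def segmentation_metrics(pred, target, num_classes):
    """Overall accuracy, per-class IoU, and mean IoU from per-point labels."""
    confusion = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(confusion, (target, pred), 1)   # rows: ground truth, columns: prediction
    true_pos = np.diag(confusion)
    union = confusion.sum(axis=0) + confusion.sum(axis=1) - true_pos
    # Classes that never appear in prediction or ground truth get NaN.
    iou = np.where(union > 0, true_pos / np.maximum(union, 1), np.nan)
    accuracy = true_pos.sum() / confusion.sum()
    return accuracy, iou, np.nanmean(iou)

target = np.array([0, 0, 1, 1, 2, 2])
pred = np.array([0, 1, 1, 1, 2, 0])
accuracy, iou, mean_iou = segmentation_metrics(pred, target, num_classes=3)
# accuracy is 4/6, per-class IoU is [1/3, 2/3, 1/2], and mean IoU is 0.5
```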

Evaluation

Evaluation is crucial to assess the effectiveness and reliability of the trained model. Beyond quantitative metrics, qualitative assessment through visual inspection of results is essential to identify potential shortcomings and areas for improvement. Additionally, benchmarking against state-of-the-art methods provides insights into the model’s competitiveness and real-world applicability.

Takeaway

Deep learning has made point cloud processing faster and more robust. It offers capabilities for tasks such as object detection, semantic segmentation, and scene understanding. As a result, researchers and practitioners can tackle complex challenges in various domains with greater accuracy and efficiency. Finally, understanding the essential aspects of deep learning for point cloud processing is key to unlocking its full potential and driving innovation in this rapidly evolving field.
